MSDK V5: how to display the video stream

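Does anyone have a demo that displays the video stream with MSDK V5? The official samples are all in Kotlin, which I'm not very familiar with, and the official FPVWidget bundles a lot of functionality. I wrote a Java version modeled on FPVWidget, but I can't get any video to show. Could someone describe the steps for displaying video? For example, after the SDK registers successfully, how do I bind the video data to my custom SurfaceView so it is displayed? I've only used V4 and am a complete beginner with V5. Here is my code: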

```java
package com.novasky.novaskyuavariport;

import android.Manifest;
import android.os.Bundle;
import android.os.Handler;
import android.os.Looper;
import android.os.Message;
import android.view.View;

import androidx.annotation.NonNull;
import androidx.appcompat.app.AppCompatActivity;

import com.novasky.baselibrary.base.BaseActivity;
import com.novasky.baselibrary.eventbus.EventId;
import com.novasky.baselibrary.eventbus.GenEvent;
import com.novasky.baselibrary.utils.MPermissionHelper;
import com.novasky.novaskyuavariport.dji.base.SchedulerProvider;
import com.novasky.novaskyuavariport.dji.fpv.FPVWidget;
import com.novasky.novaskyuavariport.dji.manager.DjiSdkManager;
import com.novasky.novaskyuavariport.dji.util.DataProcessor;

import org.greenrobot.eventbus.Subscribe;
import org.greenrobot.eventbus.ThreadMode;

import java.util.Arrays;
import java.util.List;
import java.util.concurrent.TimeUnit;

import dji.sdk.keyvalue.value.common.CameraLensType;
import dji.v5.common.video.stream.PhysicalDevicePosition;
import dji.v5.common.video.stream.StreamSource;
import dji.v5.manager.datacenter.MediaDataCenter;
import dji.v5.utils.common.JsonUtil;
import dji.v5.utils.common.LogUtils;

import io.reactivex.rxjava3.disposables.CompositeDisposable;

public class MainActivity extends BaseActivity {

    private static final int MSG_INIT_VIDEO = 0;

    private final String TAG = MainActivity.class.getSimpleName();
    private MPermissionHelper permissionHelper;
    private FPVWidget primaryFpvWidget;
    private CompositeDisposable compositeDisposable;
    private final DataProcessor<CameraSource> cameraSourceProcessor =
            DataProcessor.create(new CameraSource(PhysicalDevicePosition.UNKNOWN, CameraLensType.UNKNOWN));

    private final String[] permissionArray = new String[]{
            Manifest.permission.READ_EXTERNAL_STORAGE,
            Manifest.permission.WRITE_EXTERNAL_STORAGE,
            Manifest.permission.ACCESS_COARSE_LOCATION,
            Manifest.permission.ACCESS_FINE_LOCATION
    };

    // Bind the Handler to the main looper explicitly; the no-arg Handler() constructor is deprecated.
    private final Handler handler = new Handler(Looper.getMainLooper()) {
        @Override
        public void handleMessage(@NonNull Message msg) {
            if (msg.what == MSG_INIT_VIDEO) {
                LogUtils.d(TAG, "Initializing video");
                initVideo();
            }
        }
    };

    @Override
    protected int setLayoutId() {
        return R.layout.activity_main;
    }

    @Override
    protected void initView() {
        primaryFpvWidget = findViewById(R.id.widget_primary_fpv);
        permissionHelper = new MPermissionHelper(this);
        permissionHelper.requestPermission(callBack, permissionArray);
    }

    @Override
    protected void initListener() {
    }

    @Override
    protected void initData() {
        initVideo();
    }

    @Subscribe(threadMode = ThreadMode.MAIN)
    public void onEventMainThread(GenEvent event) {
        switch (event.getEvent()) {
            case EventId.ProductConnect:
                // Initialize video one second after the product connects.
                handler.sendEmptyMessageDelayed(MSG_INIT_VIDEO, 1000);
                break;
        }
    }

    private void initVideo() {
        primaryFpvWidget.initVideo();
        MediaDataCenter.getInstance().getVideoStreamManager()
                .addStreamSourcesListener(sources -> runOnUiThread(() -> updateFPVWidgetSource(sources)));
        primaryFpvWidget.setOnFPVStreamSourceListener((devicePosition, lensType) -> {
            LogUtils.i(TAG, "FPVStreamSourceListener==" + devicePosition + " ;lensType=" + lensType);
            cameraSourceProcessor.onNext(new CameraSource(devicePosition, lensType));
        });
    }

    @Override
    protected void onResume() {
        super.onResume();
        compositeDisposable = new CompositeDisposable();
        // compositeDisposable.add(cameraSourceProcessor.toFlowable()
        //         .observeOn(SchedulerProvider.computation())
        //         .throttleLast(500, TimeUnit.MILLISECONDS)
        //         .subscribe(result -> runOnUiThread(() -> onCameraSourceUpdated(result.devicePosition, result.lensType)))
        // );
    }

    @Override
    protected void onDestroy() {
        // Drop any pending MSG_INIT_VIDEO so initVideo() cannot run after the Activity is gone.
        handler.removeCallbacksAndMessages(null);
        super.onDestroy();
    }

    MPermissionHelper.PermissionCallBack callBack = new MPermissionHelper.PermissionCallBack() {
        @Override
        public void permissionRegisterSuccess(String... permissions) {
            LogUtils.i(TAG, "permissions = " + Arrays.toString(permissions));
            // DjiSdkManager.getInstance().initMobileSdk(MainActivity.this);
        }

        @Override
        public void permissionRegisterError(String... permissions) {
            permissionHelper.showGoSettingPermissionsDialog(Arrays.toString(permissions));
        }
    };

    private void updateFPVWidgetSource(List<StreamSource> streamSources) {
        LogUtils.i(TAG, JsonUtil.toJson(streamSources));
        if (streamSources == null) {
            return;
        }
    }

    private static class CameraSource {
        PhysicalDevicePosition devicePosition;
        CameraLensType lensType;

        public CameraSource(PhysicalDevicePosition devicePosition, CameraLensType lensType) {
            this.devicePosition = devicePosition;
            this.lensType = lensType;
        }
    }

    @Override
    public boolean isEventBusEnable() {
        return true;
    }
}
```
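In MainActivity above, initVideo() is reached both from initData() and, one second after connection, from the EventId.ProductConnect handler, so the stream-sources listener and the widget's addCallback(this) can end up registered twice. A minimal, SDK-free sketch of an idempotent guard follows; OnceInitializer is a hypothetical helper, and calling reset() when the product disconnects is an assumption, not something the original code does.

```java
import java.util.concurrent.atomic.AtomicBoolean;

/** Hypothetical helper, not part of MSDK: runs an init action at most once until reset. */
final class OnceInitializer {
    private final AtomicBoolean done = new AtomicBoolean(false);

    /** Runs the action only if it has not run since construction or the last reset(); returns true if it ran. */
    boolean runOnce(Runnable action) {
        if (done.compareAndSet(false, true)) {
            action.run();
            return true;
        }
        return false;
    }

    /** Assumption: call this on disconnect so that a reconnect can initialize again. */
    void reset() {
        done.set(false);
    }
}
```

In the Activity this could look like videoInit.runOnce(this::initVideo) in both places, with videoInit a hypothetical field. compareAndSet keeps the check-and-mark step atomic, so it stays correct even if the two call sites ever run on different threads.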

```java
package com.novasky.novaskyuavariport.dji.fpv;

import android.content.Context;
import android.content.res.TypedArray;
import android.util.AttributeSet;
import android.view.SurfaceHolder;
import android.view.SurfaceView;

import androidx.annotation.NonNull;

import com.novasky.baselibrary.utils.LogUtils;
import com.novasky.novaskyuavariport.R;
import com.novasky.novaskyuavariport.dji.base.DJISDKModel;
import com.novasky.novaskyuavariport.dji.base.SchedulerProvider;
import com.novasky.novaskyuavariport.dji.communication.ObservableInMemoryKeyedStore;
import com.novasky.novaskyuavariport.dji.module.FlatCameraModule;

import dji.v5.common.video.channel.VideoChannelType;
import dji.v5.common.video.decoder.DecoderOutputMode;
import dji.v5.common.video.decoder.DecoderState;
import dji.v5.common.video.decoder.VideoDecoder;
import dji.v5.common.video.interfaces.IVideoDecoder;
import dji.v5.common.video.stream.StreamSource;
import dji.v5.utils.common.JsonUtil;

/**
 * <pre>
 *     author : 小刘
 *     e-mail : jsliu@novasky.cn
 *     time   : 2023/02/14
 *     desc   :
 *     version: 1.0
 * </pre>
 */
public class FPVWidget extends ConstraintLayoutWidget<FPVWidget.ModelState> implements SurfaceHolder.Callback {

    private final String TAG = FPVWidget.class.getSimpleName();
    private Context context;
    private int viewWidth = 0;
    private int viewHeight = 0;
    private int rotationAngle = 0;
    private final float ORIGINAL_SCALE = 1f;
    private final int LANDSCAPE_ROTATION_ANGLE = 0;
    private SurfaceView fpvSurfaceView;
    private int fpvStateChangeResourceId = INVALID_RESOURCE;
    private IVideoDecoder videoDecoder = null;
    private FPVWidgetModel widgetModel = new FPVWidgetModel(
            DJISDKModel.getInstance(), ObservableInMemoryKeyedStore.getInstance(), new FlatCameraModule());
    private VideoChannelType videoChannelType = VideoChannelType.PRIMARY_STREAM_CHANNEL;
    private AttributeSet attrs;

    public FPVWidget(Context context) {
        // Delegate so that context and fpvSurfaceView are also initialized on this path.
        this(context, null);
    }

    public FPVWidget(Context context, AttributeSet attrs) {
        super(context, attrs);
        this.context = context;
        initView(context, attrs);
    }

    public void setVideoChannelType(VideoChannelType videoChannelType) {
        this.videoChannelType = videoChannelType;
        widgetModel.setVideoChannelType(videoChannelType);
    }

    /** Creates the decoder on first use, or resumes it if it was paused. */
    private void createOrResumeDecoder() {
        if (videoDecoder == null) {
            videoDecoder = new VideoDecoder(
                    context,
                    videoChannelType,
                    DecoderOutputMode.SURFACE_MODE,
                    fpvSurfaceView.getHolder(),
                    fpvSurfaceView.getWidth(),
                    fpvSurfaceView.getHeight(),
                    true
            );
        } else if (videoDecoder.getDecoderStatus() == DecoderState.PAUSED) {
            videoDecoder.onResume();
        }
    }

    @Override
    public void surfaceCreated(@NonNull SurfaceHolder surfaceHolder) {
        LogUtils.i(TAG, "surfaceCreated===videoChannelType=" + videoChannelType + " ;" + (videoDecoder == null));
        createOrResumeDecoder();
    }

    @Override
    public void surfaceChanged(@NonNull SurfaceHolder surfaceHolder, int format, int width, int height) {
        createOrResumeDecoder();
        if (videoDecoder != null) {
            LogUtils.i(TAG, "surfaceChanged====" + videoChannelType
                    + " ;width=" + videoDecoder.getVideoWidth() + " ;height=" + videoDecoder.getVideoHeight());
        }
    }

    @Override
    public void surfaceDestroyed(@NonNull SurfaceHolder surfaceHolder) {
        if (videoDecoder != null) {
            videoDecoder.onPause();
        }
    }

    public void updateVideoSource(StreamSource source, VideoChannelType channelType) {
        LogUtils.i(TAG, "updateVideoSource: " + JsonUtil.toJson(source) + " ;channelType=" + channelType);
        widgetModel.setStreamSource(source);
        if (videoChannelType != channelType) {
            changeVideoDecoder(channelType);
        }
        videoChannelType = channelType;
    }

    public StreamSource getStreamSource() {
        return widgetModel.streamSource;
    }

    public void changeVideoDecoder(VideoChannelType channel) {
        LogUtils.i(TAG, "changeVideoDecoder:" + channel);
        if (videoDecoder != null) {
            videoDecoder.setVideoChannelType(channel);
        }
        fpvSurfaceView.invalidate();
    }

    public void setOnFPVStreamSourceListener(FPVStreamSourceListener listener) {
        widgetModel.streamSourceListener = listener;
    }

    public void setSurfaceViewZOrderOnTop(Boolean onTop) {
        fpvSurfaceView.setZOrderOnTop(onTop);
    }

    public void setSurfaceViewZOrderMediaOverlay(Boolean isMediaOverlay) {
        fpvSurfaceView.setZOrderMediaOverlay(isMediaOverlay);
    }

    //endregion

    //region Helpers

    private void setViewDimensions(int measuredWidth, int measuredHeight) {
        viewWidth = measuredWidth;
        viewHeight = measuredHeight;
    }

    @Override
    protected void initView(Context context, AttributeSet attrs) {
        inflate(context, R.layout.uxsdk_widget_fpv, this);
        fpvSurfaceView = findViewById(R.id.surface_view_fpv);
        this.attrs = attrs;
    }

    public void initVideo() {
        if (!isInEditMode()) {
            fpvSurfaceView.getHolder().addCallback(this);
            rotationAngle = LANDSCAPE_ROTATION_ANGLE;
        }
        if (widgetModel != null) {
            widgetModel.setVideoChannelType(videoChannelType);
        }
        if (attrs != null) {
            initAttributes(context, attrs);
        }
    }

    private void initAttributes(Context context, AttributeSet attrs) {
        LogUtils.d(TAG, "initAttributes=====");
        TypedArray typedArray = context.obtainStyledAttributes(attrs, R.styleable.FPVWidget);
        try {
            if (!isInEditMode()) {
                int videoChannel = typedArray.getInt(R.styleable.FPVWidget_uxsdk_videoChannelType, 0);
                videoChannelType = VideoChannelType.find(videoChannel);
                if (widgetModel != null) {
                    widgetModel.setVideoChannelType(videoChannelType);
                    // getVideoChannel() may still return null here (no stream source yet),
                    // which makes initStreamSource() throw a NullPointerException.
                    widgetModel.initStreamSource();
                    if (widgetModel.streamSource != null) {
                        updateVideoSource(widgetModel.streamSource, videoChannelType);
                    }
                }
            }
        } finally {
            // TypedArray instances are pooled and must be recycled after use.
            typedArray.recycle();
        }
    }

    @Override
    protected void onAttachedToWindow() {
        super.onAttachedToWindow();
        if (!isInEditMode() && widgetModel != null) {
            widgetModel.setup();
        }
        initializeListeners();
    }

    @Override
    protected void onDetachedFromWindow() {
        destroyListeners();
        if (!isInEditMode() && widgetModel != null) {
            widgetModel.cleanup();
        }
        // Guard against detaching before any surface (and therefore any decoder) was created.
        if (videoDecoder != null) {
            videoDecoder.destroy();
            videoDecoder = null;
        }
        super.onDetachedFromWindow();
    }

    private void initializeListeners() {
        // TODO: to be added later
    }

    private void destroyListeners() {
        // TODO: to be added later
    }

    private void delayCalculator() {
        // TODO: to be added later
    }

    @Override
    protected void reactToModelChanges() {
        addReaction(widgetModel.getCameraNameProcessor().toFlowable()
                .observeOn(SchedulerProvider.ui())
                .subscribe(cameraName -> {
                    // updateCameraName(cameraName);
                }));
        addReaction(widgetModel.getCameraSideProcessor().toFlowable()
                .observeOn(SchedulerProvider.ui())
                .subscribe(cameraSide -> {
                    // updateCameraSide(cameraSide);
                }));
        addReaction(widgetModel.getHasVideoViewChanged()
                .observeOn(SchedulerProvider.ui())
                .subscribe(changed -> {
                    if (changed) {
                        // delayCalculator();
                    }
                }));
    }

    /**
     * Class defines the widget state updates
     */
    class ModelState {
    }
}
```
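The surface callbacks in FPVWidget implement a small lifecycle: create the decoder on the first surfaceCreated/surfaceChanged, pause it in surfaceDestroyed, resume it when the surface returns, and destroy it on detach. The sketch below models that lifecycle in plain Java so it can be checked without a device; Decoder, DecoderStatus and FakeDecoder are hypothetical stand-ins, not the MSDK IVideoDecoder/DecoderState API.

```java
import java.util.function.Supplier;

/** Hypothetical stand-in for the decoder states FPVWidget checks. */
enum DecoderStatus { RUNNING, PAUSED, DESTROYED }

/** Hypothetical minimal decoder abstraction; not the MSDK IVideoDecoder API. */
interface Decoder {
    DecoderStatus status();
    void pause();
    void resume();
    void destroy();
}

/** Mirrors FPVWidget's surface callbacks: lazy create, pause/resume, destroy once. */
final class DecoderLifecycle {
    private final Supplier<? extends Decoder> factory;
    private Decoder decoder;

    DecoderLifecycle(Supplier<? extends Decoder> factory) {
        this.factory = factory;
    }

    /** surfaceCreated / surfaceChanged: create on first use, otherwise resume if paused. */
    void onSurfaceReady() {
        if (decoder == null) {
            decoder = factory.get();
        } else if (decoder.status() == DecoderStatus.PAUSED) {
            decoder.resume();
        }
    }

    /** surfaceDestroyed: keep the decoder but pause it. */
    void onSurfaceDestroyed() {
        if (decoder != null) {
            decoder.pause();
        }
    }

    /** onDetachedFromWindow: release the decoder; a no-op if none was ever created. */
    void onDetached() {
        if (decoder != null) {
            decoder.destroy();
            decoder = null;
        }
    }

    Decoder current() {
        return decoder;
    }
}

/** Test double that only records its status. */
final class FakeDecoder implements Decoder {
    private DecoderStatus status = DecoderStatus.RUNNING;

    @Override public DecoderStatus status() { return status; }
    @Override public void pause() { status = DecoderStatus.PAUSED; }
    @Override public void resume() { status = DecoderStatus.RUNNING; }
    @Override public void destroy() { status = DecoderStatus.DESTROYED; }
}
```

One thing that may be worth checking: as far as I know, a SurfaceHolder.Callback added after the surface already exists is not sent a retroactive surfaceCreated. Since initVideo() only calls addCallback(this) after the product connects, it may help to run the create-or-resume path directly when fpvSurfaceView.getHolder().getSurface().isValid() is already true.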

```java
package com.novasky.novaskyuavariport.dji.fpv;

import androidx.annotation.NonNull;

import com.novasky.baselibrary.utils.LogUtils;
import com.novasky.novaskyuavariport.dji.base.DJISDKModel;
import com.novasky.novaskyuavariport.dji.base.ICameraIndex;
import com.novasky.novaskyuavariport.dji.base.WidgetModel;
import com.novasky.novaskyuavariport.dji.communication.ObservableInMemoryKeyedStore;
import com.novasky.novaskyuavariport.dji.module.FlatCameraModule;
import com.novasky.novaskyuavariport.dji.util.CameraUtil;
import com.novasky.novaskyuavariport.dji.util.DataProcessor;

import java.util.List;

import dji.sdk.keyvalue.key.CameraKey;
import dji.sdk.keyvalue.key.KeyTools;
import dji.sdk.keyvalue.msdkkeyinfo.KeyCameraVideoStreamSource;
import dji.sdk.keyvalue.value.camera.CameraOrientation;
import dji.sdk.keyvalue.value.camera.CameraVideoStreamSourceType;
import dji.sdk.keyvalue.value.camera.VideoResolutionFrameRate;
import dji.sdk.keyvalue.value.common.CameraLensType;
import dji.sdk.keyvalue.value.common.ComponentIndexType;
import dji.v5.common.utils.RxUtil;
import dji.v5.common.video.channel.VideoChannelType;
import dji.v5.common.video.interfaces.IVideoChannel;
import dji.v5.common.video.stream.StreamSource;
import dji.v5.manager.datacenter.MediaDataCenter;
import dji.v5.utils.common.JsonUtil;

import io.reactivex.rxjava3.core.Flowable;
import io.reactivex.rxjava3.disposables.Disposable;
import io.reactivex.rxjava3.functions.Consumer;

/**
 * <pre>
 *     author : 小刘
 *     e-mail : jsliu@novasky.cn
 *     time   : 2023/02/14
 *     desc   :
 *     version: 1.0
 * </pre>
 */
public class FPVWidgetModel extends WidgetModel implements ICameraIndex {

    private final String TAG = FPVWidgetModel.class.getSimpleName();
    private FlatCameraModule flatCameraModule;
    private CameraLensType currentLensType = CameraLensType.CAMERA_LENS_DEFAULT;
    private DataProcessor<CameraVideoStreamSourceType> streamSourceCameraTypeProcessor = DataProcessor.create(CameraVideoStreamSourceType.UNKNOWN);
    private DataProcessor<CameraOrientation> orientationProcessor = DataProcessor.create(CameraOrientation.UNKNOWN);
    private DataProcessor<VideoResolutionFrameRate> resolutionAndFrameRateProcessor = DataProcessor.create(new VideoResolutionFrameRate());
    private DataProcessor<String> cameraNameProcessor = DataProcessor.create("");
    private DataProcessor<String> cameraSideProcessor = DataProcessor.create("");
    private DataProcessor<Boolean> videoViewChangedProcessor = DataProcessor.create(false);
    public FPVStreamSourceListener streamSourceListener = null;
    public VideoChannelType videoChannelType = VideoChannelType.PRIMARY_STREAM_CHANNEL;

    /**
     * The current camera index. This value should only be used for video size calculation.
     * To get the camera side, use {@link #getCameraSideProcessor()} instead.
     */
    private ComponentIndexType currentCameraIndex = ComponentIndexType.LEFT_OR_MAIN;
    public StreamSource streamSource = null;
    private Flowable<Boolean> hasVideoViewChanged;

    protected FPVWidgetModel(@NonNull DJISDKModel djiSdkModel, @NonNull ObservableInMemoryKeyedStore uxKeyManager, FlatCameraModule flatCameraModule) {
        super(djiSdkModel, uxKeyManager);
        this.flatCameraModule = flatCameraModule;
        init();
    }

    protected FPVWidgetModel(@NonNull DJISDKModel djiSdkModel, @NonNull ObservableInMemoryKeyedStore uxKeyManager) {
        // Delegate so that flatCameraModule is never null when init() calls addModule(...).
        this(djiSdkModel, uxKeyManager, new FlatCameraModule());
    }

    public void setVideoChannelType(VideoChannelType videoChannelType) {
        this.videoChannelType = videoChannelType;
    }

    private void init() {
        addModule(flatCameraModule);
    }

    @Override
    public ComponentIndexType getCameraIndex() {
        return currentCameraIndex;
    }

    @Override
    public CameraLensType getLensType() {
        return currentLensType;
    }

    @Override
    public void updateCameraSource(ComponentIndexType cameraIndex, CameraLensType lensType) {
    }

    public void initStreamSource() {
        // getVideoChannel() returns null until a video channel is available,
        // so calling this too early throws a NullPointerException.
        streamSource = getVideoChannel().getStreamSource();
    }

    @Override
    protected void inSetup() {
        LogUtils.d(TAG, "inSetup()=======");
        if (streamSource != null) {
            currentCameraIndex = CameraUtil.getCameraIndex(streamSource.getPhysicalDevicePosition());
            Consumer videoViewChangedConsumer = new Consumer() {
                @Override
                public void accept(Object o) {
                    videoViewChangedProcessor.onNext(true);
                }
            };
            bindDataProcessor(KeyTools.createKey(CameraKey.KeyCameraOrientation, currentCameraIndex), orientationProcessor, videoViewChangedConsumer);
            bindDataProcessor(KeyTools.createKey(CameraKey.KeyCameraVideoStreamSource, currentCameraIndex), streamSourceCameraTypeProcessor);
            // CameraVideoStreamSourceType cameraVideoStreamSourceType = KeyTools.createCameraKey();
            //// CameraVideoStreamSourceType cameraVideoStreamSourceType = bindDataProcessor(CameraKey.KeyCameraVideoStreamSource.create(currentCameraIndex), streamSourceCameraTypeProcessor);
            // if (null != cameraVideoStreamSourceType) {
            //     switch (cameraVideoStreamSourceType) {
            //         case WIDE_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_WIDE;
            //             break;
            //         case ZOOM_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_ZOOM;
            //             break;
            //         case INFRARED_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_THERMAL;
            //             break;
            //         case NDVI_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_MS_NDVI;
            //             break;
            //         case MS_G_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_MS_G;
            //             break;
            //         case MS_R_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_MS_R;
            //             break;
            //         case MS_RE_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_MS_RE;
            //             break;
            //         case MS_NIR_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_MS_NIR;
            //             break;
            //         case RGB_CAMERA:
            //             currentLensType = CameraLensType.CAMERA_LENS_RGB;
            //             break;
            //         default:
            //             currentLensType = CameraLensType.CAMERA_LENS_DEFAULT;
            //             break;
            //     }
            bindDataProcessor(KeyTools.createCameraKey(CameraKey.KeyVideoResolutionFrameRate, currentCameraIndex, currentLensType), resolutionAndFrameRateProcessor);
            Disposable disposable = flatCameraModule.cameraModeDataProcessor.toFlowable()
                    .doOnNext(videoViewChangedConsumer)
                    .subscribe(new Consumer() {
                        @Override
                        public void accept(Object o) throws Throwable {
                            LogUtils.d(TAG, "camera mode: ");
                        }
                    });
            addDisposable(disposable);
            sourceUpdate();
            // }
        }
        LogUtils.i(TAG, "inSetup,streamSource:" + JsonUtil.toJson(streamSource) + " ;currentCameraIndex=" + currentCameraIndex);
    }

    @Override
    protected void inCleanup() {
        currentLensType = CameraLensType.CAMERA_LENS_DEFAULT;
    }

    private void sourceUpdate() {
        updateCameraDisplay();
        onStreamSourceUpdated();
    }

    public void updateStates() {
        // Nothing to implement here.
    }

    private void updateCameraDisplay() {
        if (streamSource != null) {
            String cameraName = streamSource.getPhysicalDeviceCategory().name() + "_" + streamSource.getPhysicalDeviceType().getDeviceType();
            if (currentLensType != CameraLensType.CAMERA_LENS_DEFAULT) {
                cameraName = cameraName + "_" + currentLensType.name();
            }
            cameraNameProcessor.onNext(cameraName);
            cameraSideProcessor.onNext(streamSource.getPhysicalDevicePosition().name());
        }
    }

    private void onStreamSourceUpdated() {
        // The listener is optional; it is only set once setOnFPVStreamSourceListener(...) has been called.
        if (streamSource != null && streamSourceListener != null) {
            streamSourceListener.onStreamSourceUpdated(streamSource.getPhysicalDevicePosition(), currentLensType);
        }
    }

    private IVideoChannel getVideoChannel() {
        // Inspected in the debugger: both lists are empty at this point.
        List<StreamSource> list = MediaDataCenter.getInstance().getVideoStreamManager().getAvailableStreamSources();
        List<IVideoChannel> channels = MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannels();
        // LogUtils.d(TAG, "Video source channel: " + (null != MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannel(videoChannelType) ? MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannel(videoChannelType) : "null"));
        return MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannel(videoChannelType);
    }

    public DataProcessor<String> getCameraNameProcessor() {
        return cameraNameProcessor;
    }

    public DataProcessor<String> getCameraSideProcessor() {
        return cameraSideProcessor;
    }

    public Flowable<Boolean> getHasVideoViewChanged() {
        hasVideoViewChanged = videoViewChangedProcessor.toFlowable();
        return hasVideoViewChanged;
    }

    public void setStreamSource(StreamSource streamSource) {
        this.streamSource = streamSource;
        restart();
    }
}
```
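The commented-out switch in inSetup() maps a CameraVideoStreamSourceType to a CameraLensType. If that mapping is revived, a lookup table keeps it in one place. The sketch below uses local stand-in enums that copy only the constant names from that switch, so it compiles without the MSDK; with the real SDK enums the same EnumMap approach should carry over. Treating null as "unmapped" is an assumption (the commented code guarded null with an if instead).

```java
import java.util.EnumMap;
import java.util.Map;

/** Local stand-in for CameraVideoStreamSourceType (only the values named in the switch). */
enum StreamSourceType {
    WIDE_CAMERA, ZOOM_CAMERA, INFRARED_CAMERA, NDVI_CAMERA,
    MS_G_CAMERA, MS_R_CAMERA, MS_RE_CAMERA, MS_NIR_CAMERA, RGB_CAMERA, UNKNOWN
}

/** Local stand-in for CameraLensType (only the values named in the switch). */
enum LensType {
    CAMERA_LENS_DEFAULT, CAMERA_LENS_WIDE, CAMERA_LENS_ZOOM, CAMERA_LENS_THERMAL,
    CAMERA_LENS_MS_NDVI, CAMERA_LENS_MS_G, CAMERA_LENS_MS_R, CAMERA_LENS_MS_RE,
    CAMERA_LENS_MS_NIR, CAMERA_LENS_RGB
}

final class LensMapping {
    private static final Map<StreamSourceType, LensType> MAP = new EnumMap<>(StreamSourceType.class);

    static {
        MAP.put(StreamSourceType.WIDE_CAMERA, LensType.CAMERA_LENS_WIDE);
        MAP.put(StreamSourceType.ZOOM_CAMERA, LensType.CAMERA_LENS_ZOOM);
        MAP.put(StreamSourceType.INFRARED_CAMERA, LensType.CAMERA_LENS_THERMAL);
        MAP.put(StreamSourceType.NDVI_CAMERA, LensType.CAMERA_LENS_MS_NDVI);
        MAP.put(StreamSourceType.MS_G_CAMERA, LensType.CAMERA_LENS_MS_G);
        MAP.put(StreamSourceType.MS_R_CAMERA, LensType.CAMERA_LENS_MS_R);
        MAP.put(StreamSourceType.MS_RE_CAMERA, LensType.CAMERA_LENS_MS_RE);
        MAP.put(StreamSourceType.MS_NIR_CAMERA, LensType.CAMERA_LENS_MS_NIR);
        MAP.put(StreamSourceType.RGB_CAMERA, LensType.CAMERA_LENS_RGB);
    }

    private LensMapping() {
    }

    /** Like the switch's default branch: anything unmapped (and, by assumption, null) maps to CAMERA_LENS_DEFAULT. */
    static LensType toLensType(StreamSourceType source) {
        if (source == null) {
            return LensType.CAMERA_LENS_DEFAULT;
        }
        return MAP.getOrDefault(source, LensType.CAMERA_LENS_DEFAULT);
    }
}
```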

-

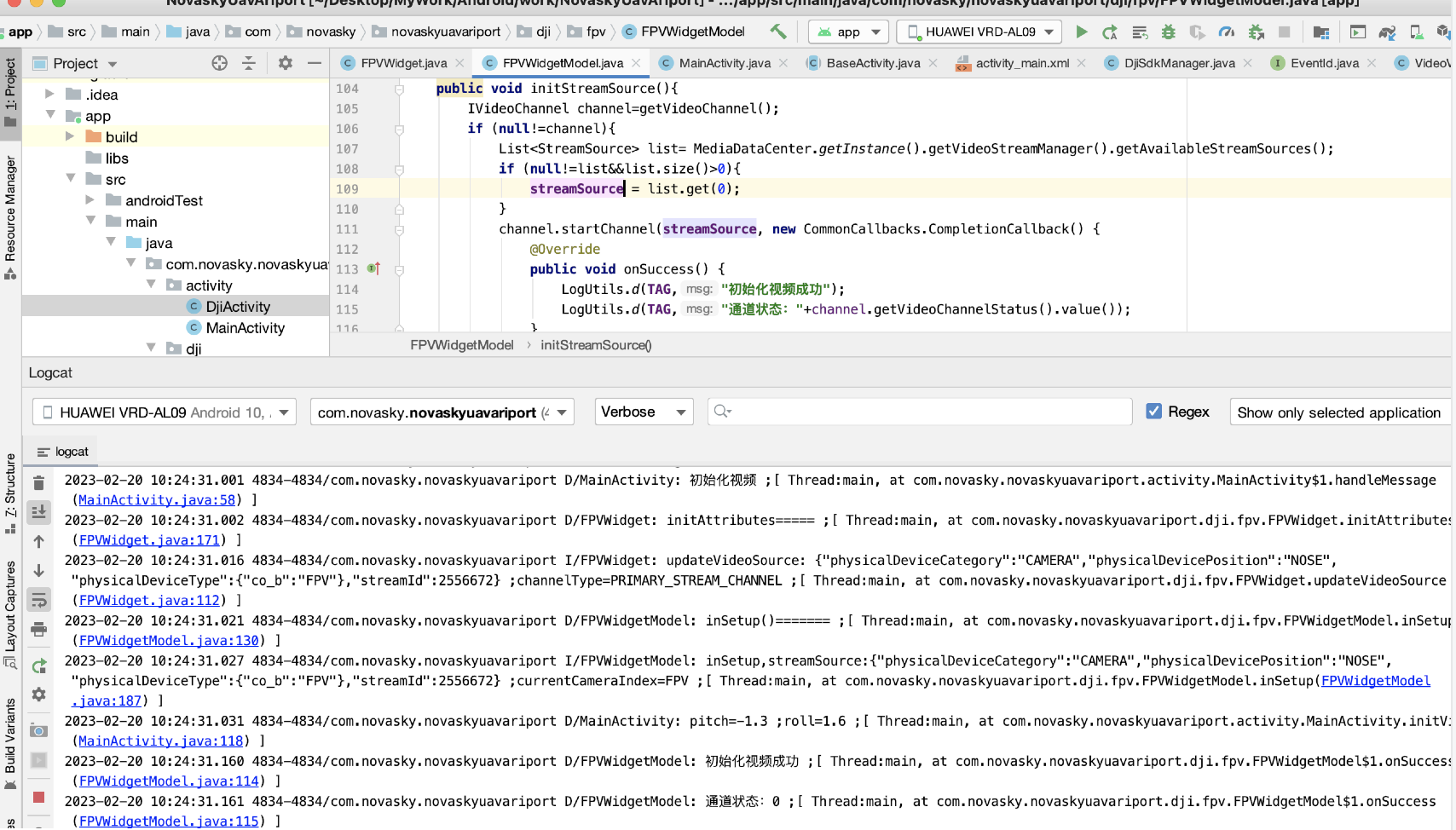

The key point: once the SDK registers successfully in MainActivity, I wait for the device-connected event and then call initVideo() in MainActivity, shown below:

```java
private void initVideo() {
    primaryFpvWidget.initVideo();
    MediaDataCenter.getInstance().getVideoStreamManager()
            .addStreamSourcesListener(sources -> runOnUiThread(() -> updateFPVWidgetSource(sources)));
    primaryFpvWidget.setOnFPVStreamSourceListener((devicePosition, lensType) -> {
        LogUtils.i(TAG, "FPVStreamSourceListener==" + devicePosition + " ;lensType=" + lensType);
        cameraSourceProcessor.onNext(new CameraSource(devicePosition, lensType));
    });
}
```

Stepping through with breakpoints, execution then reaches the following methods in FPVWidget:

public void initVideo(){

if (!isInEditMode()) {

fpvSurfaceView.getHolder().addCallback(this);

rotationAngle = LANDSCAPE_ROTATION_ANGLE;

}

if (null!=widgetModel){

widgetModel.setVideoChannelType(videoChannelType);

}

if (null!=attrs){

initAttributes(context, attrs);

}

}

private void initAttributes(Context context,AttributeSet attrs) {

LogUtils.d(TAG,"initAttributes=====");

TypedArray typedArray=context.obtainStyledAttributes(attrs, R.styleable.FPVWidget);

if (null!=typedArray){

if (!isInEditMode()){

int videoChannel=typedArray.getInt(R.styleable.FPVWidget_uxsdk_videoChannelType,0);

videoChannelType = (VideoChannelType.find(videoChannel));

if (null!=widgetModel){

widgetModel.setVideoChannelType(videoChannelType);

widgetModel.initStreamSource();

if (null!=widgetModel.streamSource){

updateVideoSource(widgetModel.streamSource,videoChannelType);

}

}

}

}

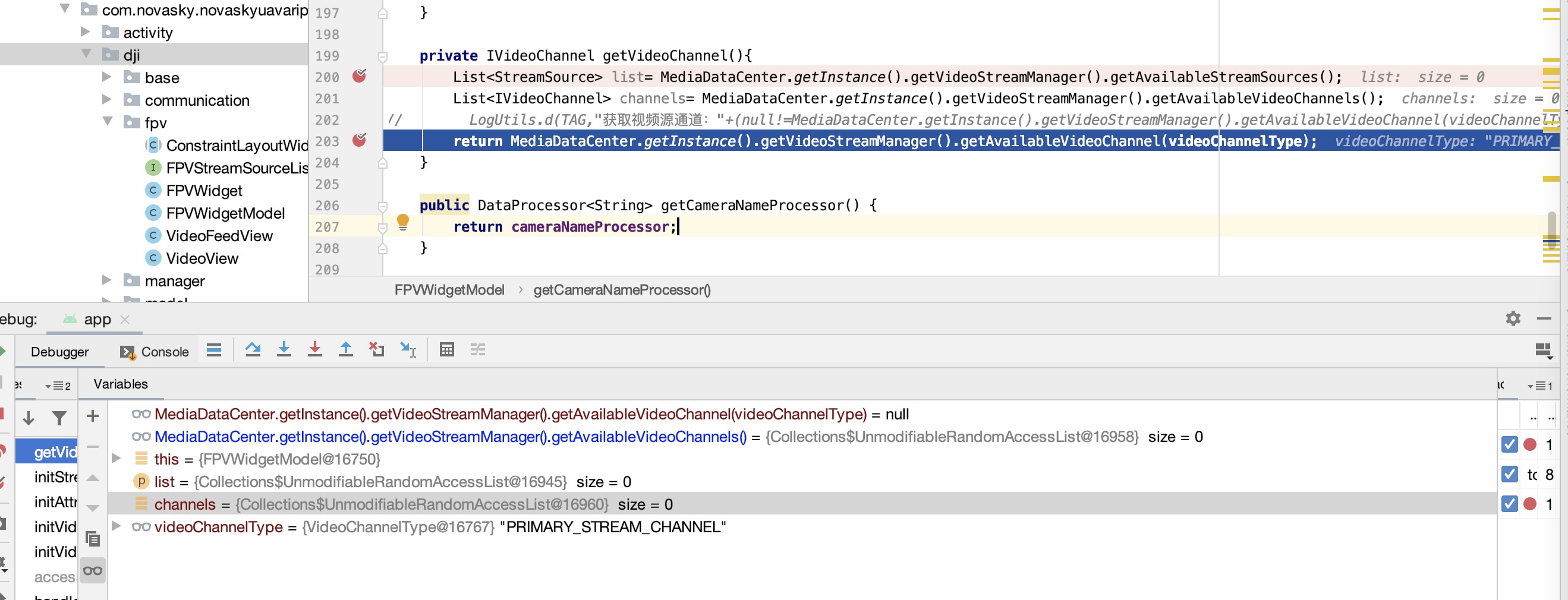

}但是在这里面的widgetModel.initStreamSource();时就报空指针了,我断点调试发现底下不管时获取StreamSource集合或者IVideoChannel集合都是为空的,然后MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannel(videoChannelType)返回来也是空的,如下图所示

```java
private IVideoChannel getVideoChannel() {
    // Inspected in the debugger: both lists are empty at this point.
    List<StreamSource> list = MediaDataCenter.getInstance().getVideoStreamManager().getAvailableStreamSources();
    List<IVideoChannel> channels = MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannels();
    // LogUtils.d(TAG, "Video source channel: " + (null != MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannel(videoChannelType) ? MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannel(videoChannelType) : "null"));
    return MediaDataCenter.getInstance().getVideoStreamManager().getAvailableVideoChannel(videoChannelType);
}
```
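Because getAvailableVideoChannel(videoChannelType) can return null before any stream source exists, initStreamSource() dereferences null when it runs too early. One way to avoid that is to treat the lookup as optional and retry it whenever the stream-sources listener fires. DeferredLookup below is a hypothetical, SDK-free helper sketching that idea; the Supplier stands in for the MSDK call.

```java
import java.util.Optional;
import java.util.function.Consumer;
import java.util.function.Supplier;

/**
 * Hypothetical, SDK-free helper: retries a lookup that may return null
 * (for example a video-channel query made before any stream source exists)
 * and delivers the value to a callback exactly once, when it first appears.
 */
final class DeferredLookup<T> {
    private final Supplier<T> lookup;
    private final Consumer<T> onFound;
    private boolean found = false;

    DeferredLookup(Supplier<T> lookup, Consumer<T> onFound) {
        this.lookup = lookup;
        this.onFound = onFound;
    }

    /** Call at init time and again on every "sources updated" event; returns true once resolved. */
    boolean tryResolve() {
        if (!found) {
            Optional.ofNullable(lookup.get()).ifPresent(value -> {
                found = true;
                onFound.accept(value);
            });
        }
        return found;
    }
}
```

In the widget, tryResolve() would be called once from initAttributes(...) and again from the addStreamSourcesListener(...) callback, with onFound reading getStreamSource() from the channel and passing it to updateVideoSource(...). This wiring is an assumption about how the pieces could fit together, not tested MSDK usage.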

-

I verified this with MSDK 5.2 on a Mavic 3: essentially, as soon as onProductConnect is called back, onStreamSourceUpdate is called back with the available video source information.
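Going by that reply, stream sources only become available right after onProductConnect, while the SurfaceView's surface may be created before or after that. A minimal sketch of a gate that starts video only once both have happened, in either order, follows; VideoStartGate is a hypothetical plain-Java helper, and feeding it from addStreamSourcesListener(...) and surfaceCreated(...) is an assumption about how it would be wired into the widget.

```java
import java.util.Collections;
import java.util.List;
import java.util.function.Consumer;

/**
 * Hypothetical, SDK-free gate: starts video once, and only after BOTH the
 * surface is ready and a non-empty list of stream sources has arrived,
 * regardless of which of the two events happens first.
 */
final class VideoStartGate<S> {
    private final Consumer<S> starter;
    private boolean surfaceReady = false;
    private List<S> sources = Collections.emptyList();
    private boolean started = false;

    VideoStartGate(Consumer<S> starter) {
        this.starter = starter;
    }

    /** Would be called from SurfaceHolder.Callback#surfaceCreated. */
    void onSurfaceReady() {
        surfaceReady = true;
        maybeStart();
    }

    /** Would be called from the stream-sources listener; null or empty lists are expected early on. */
    void onSourcesUpdated(List<S> newSources) {
        sources = (newSources == null) ? Collections.<S>emptyList() : newSources;
        maybeStart();
    }

    boolean isStarted() {
        return started;
    }

    private void maybeStart() {
        if (!started && surfaceReady && !sources.isEmpty()) {
            started = true;
            // Uses the first source; choosing among several sources is left to the caller.
            starter.accept(sources.get(0));
        }
    }
}
```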
