Is there any way to stream real-time video directly to the remote controller with PSDK?

How can I use the PSDK to get a real-time video stream from a third-party camera in DJI Pilot? I would like to know which API or tutorial I should refer to.

# Situation

We are developing a gas inspection system, and we need to get a real-time video stream from a third-party camera attached to the X-Port while also controlling the X-Port.

I can control the X-Port using the PSDK and DJI Pilot, but I cannot get a live stream from the third-party camera on the ground while controlling the X-Port.

#What I have tried

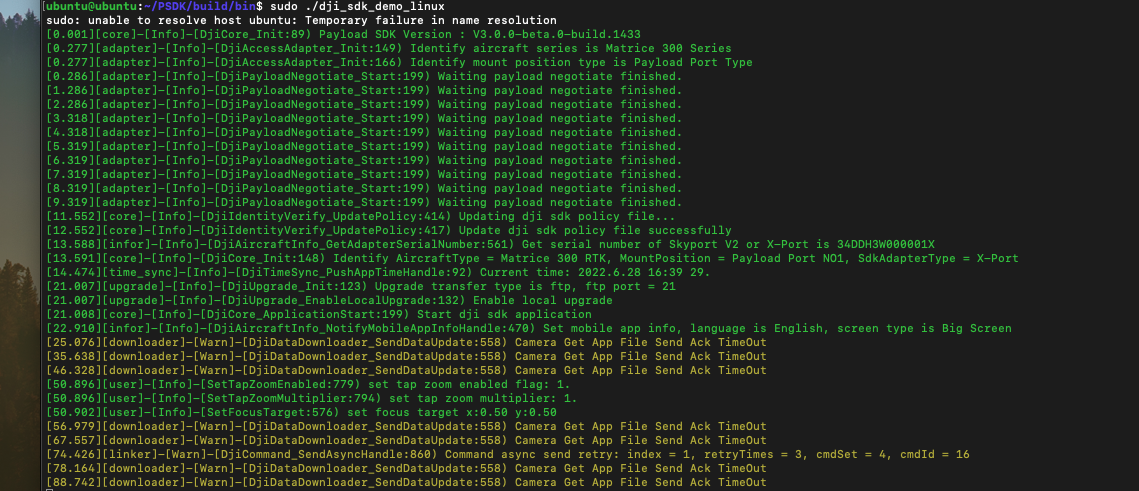

I have tested live-view function on PSDK3.0 demo app(dji_sdk_demo_linux).

It seems that the "DjiLiveview_StartH264Stream" function just record the video on aircraft and I cannot see the live stream video by remote controller on the ground.

https://sdk-forum.dji.net/hc/zh-cn/articles/900007012123

According to this article, PSDK can transmit the video stream directly to the remote controller (MSDK) over the DJI wireless link, so I would like to view the real-time stream from the PSDK camera on the ground without any additional communication equipment.

# Device

- M300 RTK
- USB camera (Logicool)
- OSDK expansion interface
- Third-party development board (Raspberry Pi 4)
- PSDK Development Kit 2.0

# Additional

I want to preprocess the video (overlay a graph of the gas amount), so it does not matter to me whether I use OSDK or PSDK, as long as I can receive the third-party camera's real-time stream on the ground and also edit that stream.
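For the overlay described above, one simple option (an assumption, not a PSDK feature) is to draw into each raw frame before it is encoded. A minimal sketch, assuming the frames have already been converted to YUV420P and using a hypothetical `draw_level_bar` helper that paints a bar into the luma (Y) plane only:

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: draws a vertical bar whose height is proportional to
 * `level` (clamped to 0.0..1.0) into the Y plane of a YUV420P frame.
 * Only luma is modified, so the bar appears as a bright gray/white overlay. */
void draw_level_bar(uint8_t *y_plane, int stride, int width, int height,
                    int bar_x, int bar_w, double level)
{
    if (level < 0.0) level = 0.0;
    if (level > 1.0) level = 1.0;

    int bar_h = (int)(level * height);   /* bar height in pixels */
    if (bar_x < 0) bar_x = 0;
    if (bar_x + bar_w > width) bar_w = width - bar_x;
    if (bar_w <= 0 || bar_h <= 0) return;

    /* Fill rows from the bottom of the frame upward with Y = 235
     * (nominal white in limited-range video). */
    for (int row = height - bar_h; row < height; row++) {
        memset(y_plane + (size_t)row * stride + bar_x, 235, (size_t)bar_w);
    }
}
```

With FFmpeg, this would be called on `frame->data[0]` with `frame->linesize[0]` as the stride, just before the frame is passed to the encoder.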

Thank you for your daily support.

-

Your requirement seems to be sending the video stream from a third-party camera to the RC. That is the PSDK camera emulation (camera_emu) function: you should connect the third-party device to the X-Port or SkyPort and mount it on the M300. There is a sample showing how to send a stream (simulated from an H.264 file) to the RC app: https://github.com/dji-sdk/Payload-SDK/tree/master/samples/sample_c/module_sample/camera_emu

-

Thank you so much for your advice.

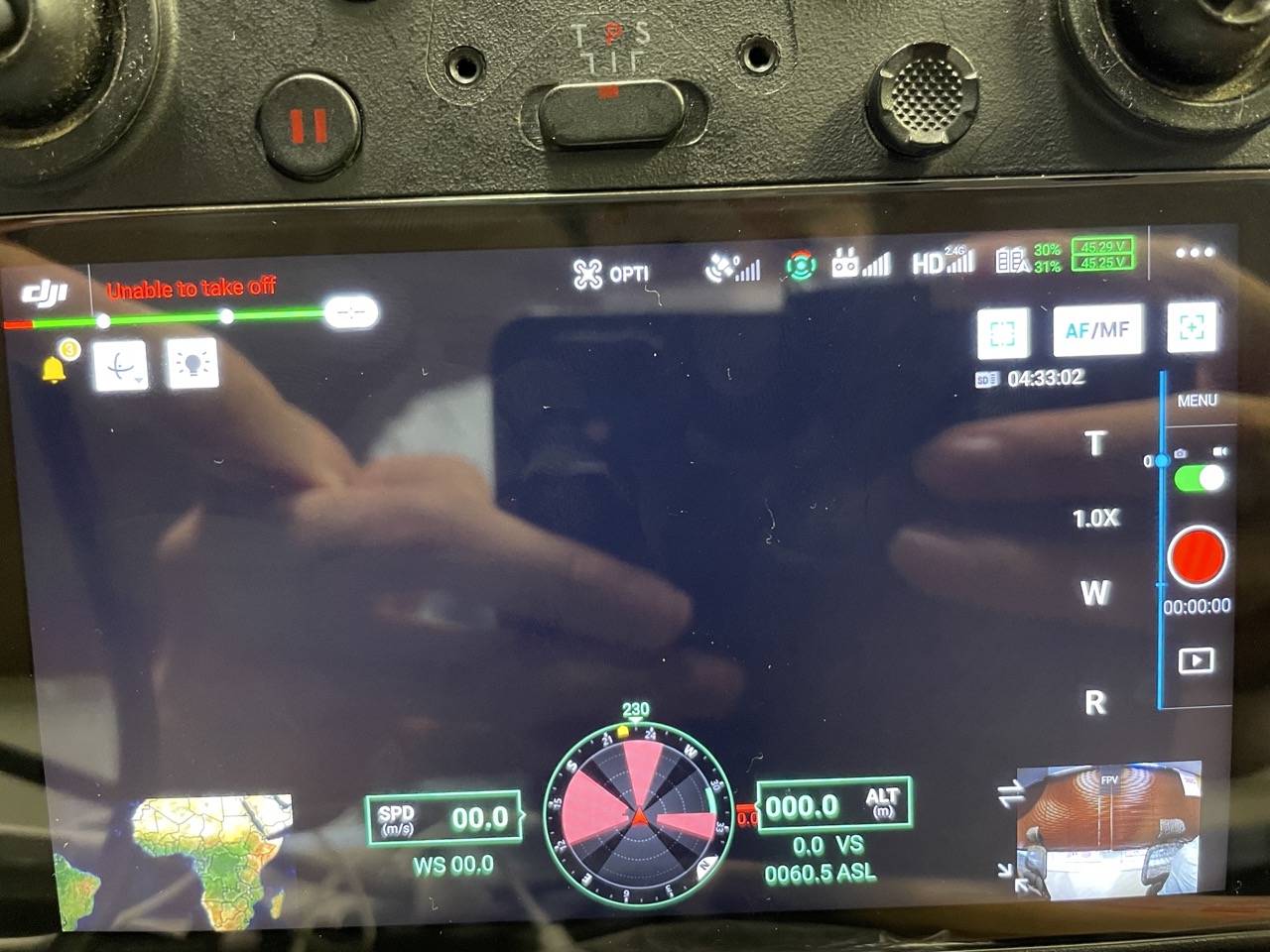

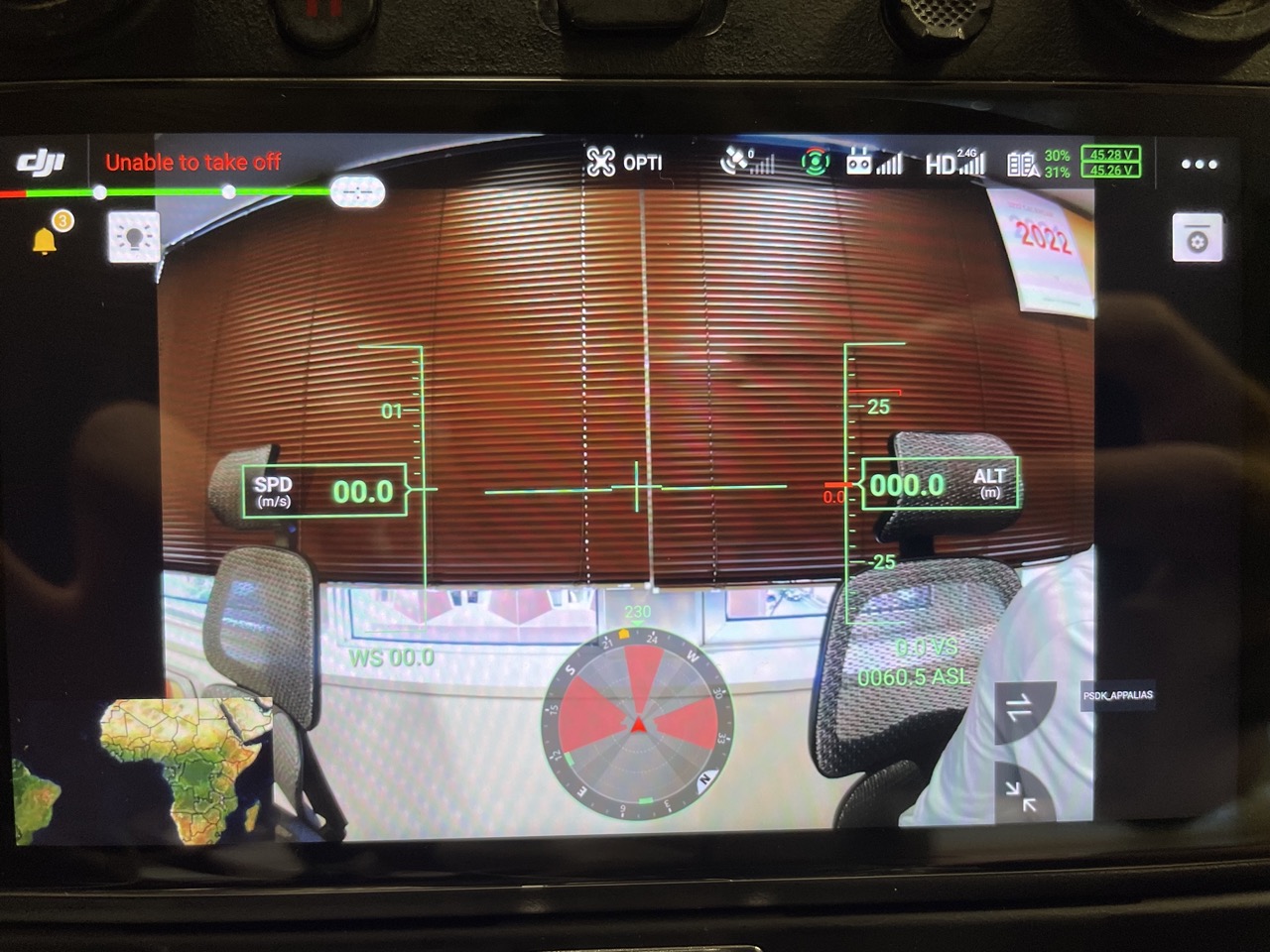

I have confirmed by debugging that the "DjiTest_CameraEmuBaseStartService()" function in sample_c/platform/linux/manifold2/application/main.c runs. However, the Pilot app only displays the front camera video (window name: FPV) and a black screen (window name: PSDK_APPALIAS).

I cannot see the streamed video in the Pilot app (I expected the demo app to stream PSDK_0005.h264).

How can I see the emulated camera's stream?

-

Thank you.

I pinged the X-Port, and IP communication seems to work well.

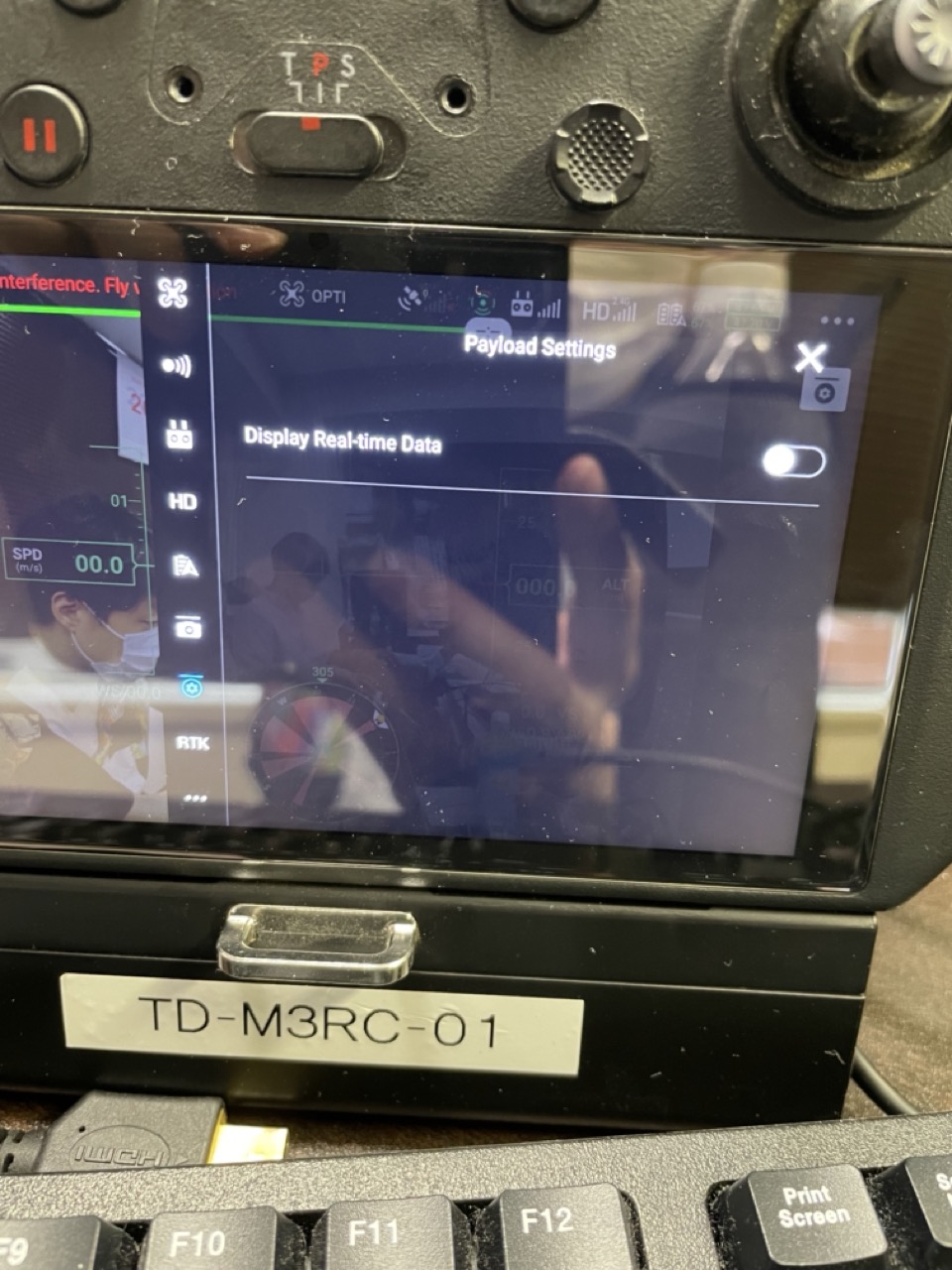

I have also found another problem similar to this post (https://sdk-forum.dji.net/hc/zh-cn/community/posts/4411736973465): when I use the X-Port as the PSDK adapter, the PSDK menu in Pilot is not fully displayed (the same "Display real-time data" (显示实时数据) error).

The PSDK menu in Pilot is fully displayed when I use the OSDK expansion board as the PSDK adapter.

Then what is the problem?
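A successful ping shows that the link works, but not which local interface name the code is configured to use. As a quick diagnostic, here is a sketch using the standard `getifaddrs` call (not a PSDK API; `find_ipv4` is a hypothetical name) that reports the IPv4 address of a given interface, so you can confirm that the interface named in the code (for example eth0 rather than lo) actually has the address you expect:

```c
#include <arpa/inet.h>
#include <ifaddrs.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>

/* Looks up the first IPv4 address assigned to `ifname` (e.g. "eth0", "lo")
 * and writes it as dotted-decimal text into `out`.
 * Returns 0 on success, -1 if there is no IPv4 address or the lookup fails. */
int find_ipv4(const char *ifname, char *out, size_t out_len)
{
    struct ifaddrs *list = NULL;
    int result = -1;

    if (getifaddrs(&list) != 0) {
        return -1;
    }
    for (struct ifaddrs *it = list; it != NULL; it = it->ifa_next) {
        if (it->ifa_addr == NULL || it->ifa_addr->sa_family != AF_INET) {
            continue;                      /* skip non-IPv4 entries */
        }
        if (strcmp(it->ifa_name, ifname) != 0) {
            continue;                      /* not the interface we want */
        }
        const struct sockaddr_in *sin = (const struct sockaddr_in *)it->ifa_addr;
        if (inet_ntop(AF_INET, &sin->sin_addr, out, (socklen_t)out_len) != NULL) {
            result = 0;
        }
        break;
    }
    freeifaddrs(list);
    return result;
}
```

For example, `find_ipv4("eth0", buf, sizeof buf)` returning -1 would suggest that eth0 has no IPv4 address configured yet.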

-

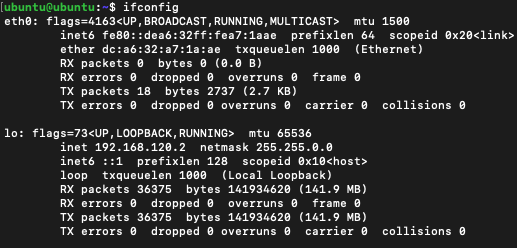

Sorry, the forum forwards posts to the DJI SDK team through the email system, so some pictures may be missing. The picture you provided shows that the network interface in use is lo (localhost). You can set it to eth0 in the code and then connect the eth0 port to the RJ45 interface of the adapter board with a network cable.

-

Thanks to your advice, I can now send the video file ("media_file/PSDK_0005.h264") to DJI Pilot with the camera_emu function.

As a next step, I want to send the real-time USB camera stream (e.g. "/dev/video0") to DJI Pilot, but I see only a black screen in the PSDK menu in Pilot.

Is this expected behavior because the code only supports video files? Or is it an error caused by the camera not meeting the payload criteria?

# How to reproduce

1. Change the "tempPath" name from "%smedia_file/PSDK_0005.h264" to "%smedia_file/video0" in test_payload_cam_emu_base.c.

2. Encode the camera output to H.264 with the command below, because my webcam cannot output H.264 directly:

   ```shell
   ffmpeg -y -f v4l2 -thread_queue_size 8192 -input_format yuyv422 -video_size 640x480 -framerate 30 -i /dev/video0 -c:v h264_v4l2m2m -b:v 768k -bufsize 768k -vsync 1 -g 10 -f flv /home/ubuntu/PSDK/samples/sample_c/module_sample/camera_emu/media_file/video0
   ```

3. Run `sudo ./dji_sdk_demo_linux`.
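The ffmpeg command above ends with `-f flv`, so media_file/video0 is an FLV container, whereas PSDK_0005.h264 is presumably a raw H.264 elementary stream. Whether that mismatch is the cause here is not confirmed, but the first bytes of a file tell you what it actually contains. A minimal sketch (hypothetical `detect_format` helper) that distinguishes the FLV signature from an H.264 Annex B start code:

```c
#include <stddef.h>
#include <stdint.h>

/* Guesses the format of a buffer from its first bytes:
 *   - FLV files begin with 'F' 'L' 'V' followed by version byte 0x01.
 *   - A raw H.264 Annex B stream begins with a start code,
 *     00 00 00 01 or 00 00 01. */
typedef enum { FMT_UNKNOWN, FMT_FLV, FMT_H264_ANNEXB } stream_format;

stream_format detect_format(const uint8_t *buf, size_t len)
{
    if (len >= 4 && buf[0] == 'F' && buf[1] == 'L' && buf[2] == 'V' && buf[3] == 0x01) {
        return FMT_FLV;
    }
    if (len >= 4 && buf[0] == 0 && buf[1] == 0 && buf[2] == 0 && buf[3] == 1) {
        return FMT_H264_ANNEXB;   /* 4-byte start code */
    }
    if (len >= 3 && buf[0] == 0 && buf[1] == 0 && buf[2] == 1) {
        return FMT_H264_ANNEXB;   /* 3-byte start code */
    }
    return FMT_UNKNOWN;
}
```

Read the first few bytes of the file with `fread` and pass them in. If a raw stream is what the sample expects, ffmpeg's `-f h264` output format writes one; whether the sample can read a file that is still being written is a separate question.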

-

If you look at the test_payload_cam_emu_media.c file in the PSDK sample, the SDK provides a function that accepts video "frames" and transmits them to the remote controller. With the FFmpeg library, the corresponding structure for these "frames" is AVPacket.

In other words, to combine PSDK and FFmpeg, the data needs to flow like this:

Video stream => H264 packets => PSDK DjiPayloadCamera_SendVideoStream(h264 packet data + 6-byte marker) => remote control.
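The last step of this pipeline can be sketched as a small helper that builds the buffer handed to DjiPayloadCamera_SendVideoStream: one encoded frame followed by the 6-byte marker. The marker bytes below (an H.264 access unit delimiter, NAL type 9) are an assumption recalled from the camera_emu sample's `s_frameAudInfo`, and `build_psdk_frame` is a hypothetical name; compare both against your copy of test_payload_cam_emu_media.c.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* ASSUMED marker: start code + access unit delimiter, as recalled from the
 * camera_emu sample (s_frameAudInfo, VIDEO_FRAME_AUD_LEN). Verify locally. */
#define AUD_LEN 6
static const uint8_t k_aud[AUD_LEN] = {0x00, 0x00, 0x00, 0x01, 0x09, 0x10};

/* Copies one encoded H.264 frame into `out` and appends the marker.
 * Returns the total number of bytes written, or 0 if `out` is too small. */
size_t build_psdk_frame(const uint8_t *frame, size_t frame_len,
                        uint8_t *out, size_t out_cap)
{
    if (frame_len > out_cap || out_cap - frame_len < AUD_LEN) {
        return 0;
    }
    memcpy(out, frame, frame_len);
    memcpy(out + frame_len, k_aud, AUD_LEN);
    return frame_len + AUD_LEN;
}
```

The capacity check guards against the buffer overflow that a bare pair of `memcpy` calls would cause if a large keyframe arrives.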

We have not yet figured out how to capture packets from a live video stream, but it looks like it can be done with the FFmpeg library. The remaining steps are more or less straightforward. Hope this helps, and if you figure out the packet-capturing part, please give us some pointers.

-

We ended up taking a different approach, but I believe you can use a parser (AVCodecParserContext) to extract packets from raw live-stream data. See decode_video.c in the FFmpeg examples for details. The example reads from a file, but I think the idea should be the same for a raw data stream.
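The FFmpeg parser (`av_parser_parse2` with an AVCodecParserContext) is the robust route. To illustrate what such a parser looks for, here is a deliberately simplified stand-in (a swapped-in technique, not FFmpeg's parser; `list_nal_types` is hypothetical) that splits an Annex B buffer at start codes and reports each NAL unit type. Types 7 (SPS), 8 (PPS) and 5 (IDR slice) are typically what a decoder needs before it can show a picture.

```c
#include <stddef.h>
#include <stdint.h>

/* Scans an Annex B byte stream for start codes (00 00 01, possibly preceded
 * by an extra 00) and records nal_unit_type, the low 5 bits of the byte that
 * follows each start code. Stores at most max_types entries in `types` and
 * returns the total number of NAL units found. */
size_t list_nal_types(const uint8_t *buf, size_t len,
                      uint8_t *types, size_t max_types)
{
    size_t count = 0;
    for (size_t i = 0; i + 3 < len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            uint8_t nal_type = buf[i + 3] & 0x1F;
            if (count < max_types) {
                types[count] = nal_type;
            }
            count++;
            i += 2;   /* continue scanning after the start code */
        }
    }
    return count;
}
```

Emulation-prevention bytes guarantee that 00 00 01 does not occur inside a NAL payload, which is why a plain byte scan is enough for this purpose.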

-

I have built the following pipeline using FFmpeg and the test_payload_cam_emu_media.c file from the PSDK:

Video stream => H264 packets => PSDK DjiPayloadCamera_SendVideoStream(h264 packet data + 6-byte marker) => Remote Control.

However, when I send the stream in real time, the PSDK menu on the remote controller shows only a black screen (picture below).

If I first save the encoded H.264 packets to an MP4 file and then read that file with test_payload_cam_emu_media.c, the video is sent to the RC successfully.

Do you know what could cause a black screen when sending packets directly in real time?

## What I did:

Grab a frame from the webcam and encode it → H.264 AVPacket (with the FFmpeg API).

```c
memcpy(dataBuffer, packet.data, packet.size);                          // copy the encoded packet directly into the buffer
memcpy(&dataBuffer[packet.size], s_frameAudInfo, VIDEO_FRAME_AUD_LEN); // append the 6-byte marker
```

Then I just send it with DjiPayloadCamera_SendVideoStream.

I tried to keep the settings of the encoded video (= output.mp4) close to those of the original file (media_file/PSDK0004_ORG.mp4):

```
$ ffprobe -i output.mp4
Stream #0:0(und): Video: h264 (High) (avc1 / 0x31637661), yuv420p, 640x480, 1203 kb/s, 24.08 fps, 23.98 tbr, 24k tbn, 47.95 tbc (default)
```

Key frames occur at 0.3-second intervals.

## Photo

↓ Failure with black screen

↓ The video is sent successfully if I save the encoded packets to MP4 first.
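One possible cause worth ruling out (an assumption about this setup, not a confirmed diagnosis): if the encoder is opened with `AV_CODEC_FLAG_GLOBAL_HEADER`, the SPS/PPS headers may live only in the codec context's extradata, so packets sent live never carry them, while an MP4 file stores them in its header. A minimal sketch (hypothetical `add_headers_if_missing`) that prepends Annex B SPS/PPS to keyframes lacking them; `is_key` would come from `packet.flags & AV_PKT_FLAG_KEY`, and the extradata is assumed to be Annex B (if it starts with byte 0x01 it is an avcC record and would need converting first):

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Returns 1 if the Annex B buffer contains an SPS NAL unit (type 7). */
static int has_sps(const uint8_t *buf, size_t len)
{
    for (size_t i = 0; i + 3 < len; i++) {
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1 &&
            (buf[i + 3] & 0x1F) == 7) {
            return 1;
        }
    }
    return 0;
}

/* Writes `headers` (Annex B SPS+PPS) followed by `pkt` into `out` when `pkt`
 * is a keyframe without its own SPS; otherwise copies `pkt` unchanged.
 * Returns the number of bytes written, or 0 if `out` is too small. */
size_t add_headers_if_missing(const uint8_t *pkt, size_t pkt_len, int is_key,
                              const uint8_t *headers, size_t hdr_len,
                              uint8_t *out, size_t out_cap)
{
    size_t prefix = (is_key && !has_sps(pkt, pkt_len)) ? hdr_len : 0;
    if (pkt_len > out_cap || out_cap - pkt_len < prefix) {
        return 0;
    }
    if (prefix > 0) {
        memcpy(out, headers, prefix);
    }
    memcpy(out + prefix, pkt, pkt_len);
    return prefix + pkt_len;
}
```

The result would then get the 6-byte marker appended as before. FFmpeg's `dump_extra` bitstream filter does a similar job if you prefer not to hand-roll it.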

-

Try the following encoder settings with the FFmpeg API:

```c
/* Encoder private options (libx264 naming); set them before avcodec_open2() and check ret after each call. */
ret = av_opt_set(avContext->priv_data, "preset", "ultrafast", 0);
ret = av_opt_set(avContext->priv_data, "tune", "zerolatency", 0);
ret = av_opt_set(avContext->priv_data, "crf", "20", 0); // Range is 0 to 51; lower values give higher quality and a higher bitrate.
```

Also, the bandwidth seems very limited, especially in the air, so use small frame dimensions, for example around 600x400.

-

@sam.schofield great work
